In this article I would like to show how to set up a gradle multi-module project configuration based on gradle 8.3. Browsing through the whole wide dustbin (uh, www was the correct abbreviation) gives you several solutions, but some (if not most) of them are based on older versions of gradle and/or are written in groovy, whereas I want to use only the kotlin DSL.

Kotlin has some specifics that affect the gradle configuration. One of those is the "internal" visibility modifier: classes and methods marked with it are visible only inside the same module and its test source set. See kotlin Visibility Modifiers - Modules for further information about the definition of modules. Because we want integration tests to use "internal" methods, we need some additional configuration, which is shown in this article as well.

In addition to the small project generated by gradle init, we are going to add some useful tasks, which are necessary in most mid-size and larger projects:

- Integration Tests
- Dokka Pages as well as an aggregation of those
- Jacoco Reports (including an aggregated report for all sub-projects)
- Sonarqube Reporting
- OWASP Dependency Check
- Asciidoctor Documentation

In another article I am going to explain how to release such a project with semantic versioning and github-actions, using the steps/tasks mentioned above.

Gradle recommends sharing build logic via "conventions" (convention plugins) in the build-logic directory. If you use gradle init to generate a multi-module project, some of those files are already generated automatically. Here we are going to use and extend those files, so that, for example, integration tests are supported as well.
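For orientation, here is a minimal sketch of what build-logic/build.gradle.kts can look like. It assumes that the included build is registered in the root settings.gradle.kts via `pluginManagement { includeBuild("build-logic") }` and that the Kotlin gradle plugin version matches the kotlin 1.9.0 used later in this article; the file generated in your project may differ in detail.

```kotlin
// build-logic/build.gradle.kts (sketch)
plugins {
    // Compiles the *.gradle.kts files in src/main/kotlin into precompiled script plugins
    `kotlin-dsl`
}

repositories {
    gradlePluginPortal()
}

dependencies {
    // Puts the Kotlin gradle plugin on the classpath, so that the conventions
    // can apply kotlin("jvm") without declaring a version themselves
    implementation("org.jetbrains.kotlin:kotlin-gradle-plugin:1.9.0")
}
```

Each convention file in build-logic/src/main/kotlin, such as io.kmptemplate.kotlin-common-conventions.gradle.kts, then becomes a plugin whose id is the file name without the .gradle.kts suffix.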

Install gradle and initialize project

As already mentioned, we are going to start with gradle init to generate a small multi-module project. Unfortunately, even though we are going to use the gradle wrapper in our project, gradle forces us to install gradle itself to initialize a project. Therefore the first step is to install gradle on your local machine. This can be done via your local package manager (Yum, Pacman, Homebrew, Chocolatey, …) or manually via Gradle Install.

After installing gradle, we are going to create a first small project via

```shell
gradle init --type kotlin-application --dsl kotlin --split-project --project-name kmp-template --package io.kmptemplate
```

For a full description of these parameters please refer to the Gradle Build Init Plugin documentation.

The above command line sets up a small project of type kotlin-application, which contains all required components for a kotlin command-line application. The split-project parameter generates several sub-modules. The project name is usually derived from the name of the directory; to be independent of the current directory, we provide it explicitly (project-name). The package (package io.kmptemplate) is the one used for this example project. All of these values can obviously be adapted to your personal taste. The DSL is kotlin, which provides us with *.gradle.kts files instead of groovy *.gradle files.

Since we are using the project-type kotlin-application, the pre-configured test-framework is kotlin.test and, quite obviously, the used language is kotlin.

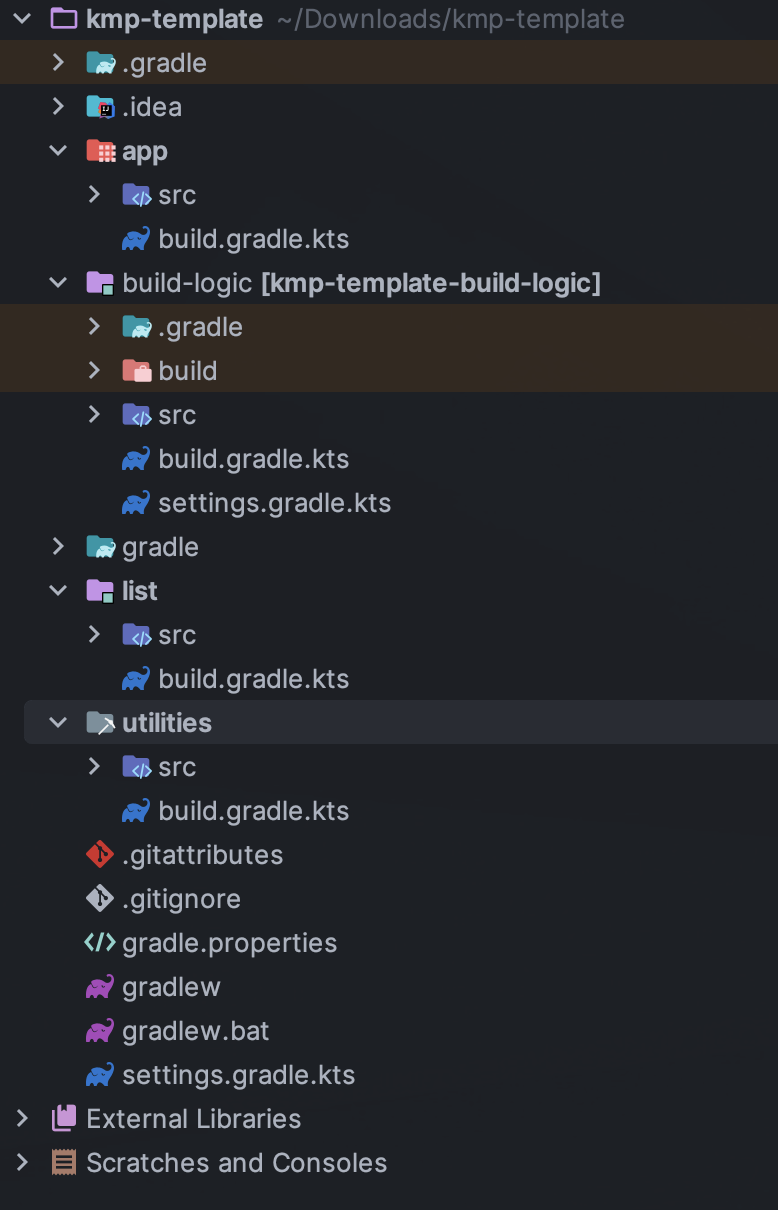

The following project structure is generated by the gradle init-command:

Please note the contents of the build-logic directory, which already contains three generated conventions. In the following steps, we are going to extend those files to fulfill our use-case.

After initializing the project, running ./gradlew tasks shows a list of all available tasks.

change directory structure

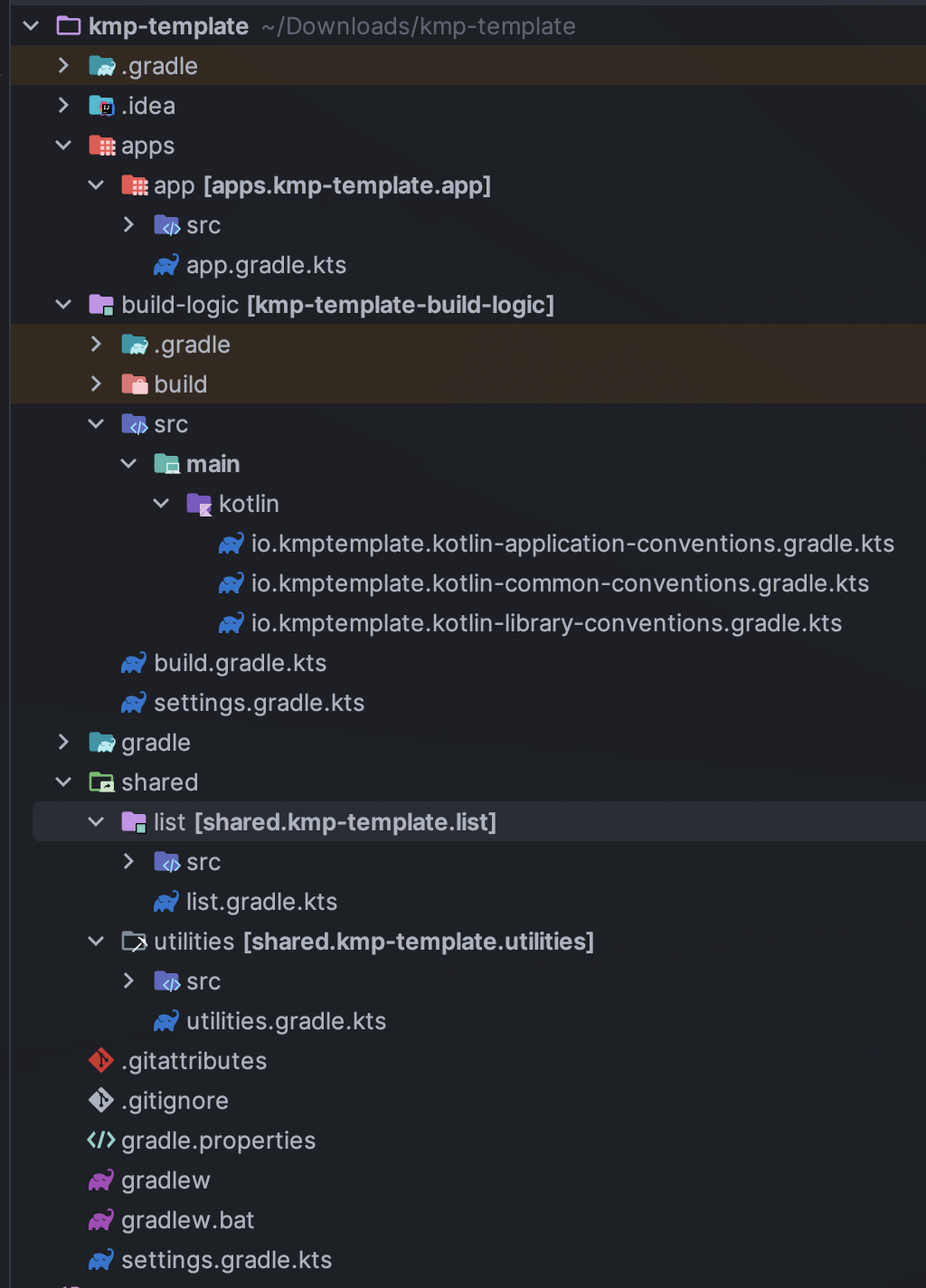

The new structure involves moving all applications (server, android, iOS, desktop, etc.) to the apps directory, and moving all shared sub-modules to the shared directory. To allow for automatic discovery of these modules, slight modifications are made to the settings.gradle.kts file.

```kotlin
fun includeProject(dir: File) {
    println("Loading submodule \uD83D\uDCE6: ${dir.name}")
    include(dir.name)
    val prj = project(":${dir.name}")
    prj.projectDir = dir
    // Each module's build script is named after its directory
    prj.buildFileName = "${dir.name}.gradle.kts"
    require(prj.projectDir.isDirectory) { "Project '${prj.path}' must have a ${prj.projectDir} directory" }
    require(prj.buildFile.isFile) { "Project '${prj.path}' must have a ${prj.buildFile} build script" }
}

fun includeProjectsInDir(dirName: String) {
    // Include every direct sub-directory of dirName as a module
    file(dirName).listFilesOrdered { it.isDirectory }
        .forEach { dir -> includeProject(dir) }
}

val projects = listOf("apps", "shared")
projects.forEach { includeProjectsInDir(it) }
```

The above code lists all sub-directories of the given directories (apps and shared in this case) and adds them as child projects of the current project (the root project). This is rather generic and makes it easy to add further modules, even in different parent directories, such as a documentation module.

Each project contains its own .gradle.kts file, which is named after the containing folder.

Please note that the functions above are split, so that in a later phase we can add projects from a different directory (documentation).

Each sub-module should contain very little gradle configuration. Only module specifics, like dependencies, should be included there. All common tasks and configurations should therefore be defined in the convention files and/or their dependencies.
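As an illustration, a sub-module build script can then shrink to something like the following sketch. It mirrors the app module generated by gradle init; the plugin id io.kmptemplate.kotlin-application-conventions, the :utilities dependency and the main class name are assumptions based on the init template, not taken from this project.

```kotlin
// apps/app/app.gradle.kts (sketch, names based on the gradle init template)
plugins {
    // All shared configuration comes from the convention plugin in build-logic
    id("io.kmptemplate.kotlin-application-conventions")
}

dependencies {
    // Only dependencies specific to this module live here
    implementation(project(":utilities"))
}

application {
    // Hypothetical main class of the generated app module
    mainClass.set("io.kmptemplate.app.AppKt")
}
```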

add additional "useful" dependencies

To write tests, add useful logging and so on, we need to provide additional dependencies. Some of them (like logging) are required in all sub-modules, so we declare them in a central place, the convention.

I decided to add the following default dependencies for all kotlin sub-modules:

- kotlin logging to make logging easier
- assertk for assertions in tests
- kotlin.test for tests

The common convention now contains all the dependencies mentioned above. To demonstrate that these dependencies are available in the modules, the class LinkedListTest inside the list module now uses them.
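A test using all three dependencies could look roughly like the sketch below. It assumes the LinkedList class generated by gradle init (package io.kmptemplate.list, with add(String) and size()); the test class name is hypothetical and not the author's LinkedListTest. Note that kotlin-logging 2.x lives in the `mu` package and only prints output if an SLF4J backend is on the classpath.

```kotlin
package io.kmptemplate.list

import assertk.assertThat
import assertk.assertions.isEqualTo
import mu.KotlinLogging
import kotlin.test.Test

// Logger provided by kotlin-logging 2.x
private val logger = KotlinLogging.logger {}

class LinkedListUsageTest {
    @Test
    fun `add increases the size`() {
        val list = LinkedList()
        list.add("element")
        // Lazy message: only built if INFO is enabled
        logger.info { "list now contains ${list.size()} element(s)" }
        // assertk assertion instead of kotlin.test's assertEquals
        assertThat(list.size()).isEqualTo(1)
    }
}
```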

The following code snippet shows the additions to the io.kmptemplate.kotlin-common-conventions.gradle.kts.

```kotlin
dependencies {
    // Add additional dependencies useful for development
    implementation("io.github.microutils:kotlin-logging:2.0.4")
    testImplementation("com.willowtreeapps.assertk:assertk-jvm:0.23")
    testImplementation(kotlin("test"))
    testImplementation(kotlin("test-junit5"))
}
```

add Dokka generation

In kotlin, the documentation of classes and methods is generated using dokka (similar to javadoc). This documentation should be generated and aggregated in a common place, so that developers can refer to it. By default dokka generates the documentation per sub-module without aggregating it. Unfortunately the dokka plugin does not follow the idiomatic gradle way, so it needs to be handled in a different manner.

The plugin can be found in the mavenCentral repository and not, like other plugins, in the gradlePluginPortal(). That means we need to add this repository to settings.gradle.kts.

```kotlin
pluginManagement {
    repositories {
        gradlePluginPortal()
        mavenCentral()
    }
}
```

It is quite important to add the plugin to the classpath in build-logic/build.gradle.kts, because that is where its version is provided; this cannot be done in the convention script itself. To be able to use a later kotlin version (this project uses 1.9.0), the transitive dependency on the kotlin stdlib is excluded from the dokka plugin.

```kotlin
dependencies {
    implementation("org.jetbrains.dokka:dokka-gradle-plugin:1.9.0") {
        // Avoid pulling in an older kotlin stdlib through the dokka plugin
        exclude(group = "org.jetbrains.kotlin", module = "kotlin-stdlib-jdk8")
    }
}
```

The dokka plugin is then applied in the common convention, so that it can be used in each kotlin module.

```kotlin
plugins {
    id("org.jetbrains.dokka")
}
```

After applying these changes, the dokkaHtml task is available in all submodules. To show this, some dummy documentation was added to the LinkedList class. The documentation is then generated in the build/dokka/html folder of each module.
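For readers new to KDoc, the following sketch shows the kind of comments dokka picks up. DocumentedList and its package are hypothetical; this is not the LinkedList from the project.

```kotlin
package io.kmptemplate.list

/**
 * A tiny list of strings, used only to demonstrate KDoc.
 *
 * dokka renders this text on the class page and turns [add] and [size] into links.
 */
class DocumentedList {
    private val items = mutableListOf<String>()

    /**
     * Appends [element] to the end of the list.
     *
     * @param element the value to store
     */
    fun add(element: String) {
        items.add(element)
    }

    /**
     * Returns the number of stored elements.
     *
     * @return the current size of the list
     */
    fun size(): Int = items.size
}
```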

To aggregate the dokka-generated documentation, we need to add a new build.gradle.kts in the root folder of the project. In this file the dokkaHtmlMultiModule task is configured.

```kotlin
plugins {
    id("org.jetbrains.dokka")
}

repositories {
    mavenCentral()
}

tasks.dokkaHtmlMultiModule.configure {
    outputDirectory.set(buildDir.resolve("dokkaCustomMultiModuleOutput"))
}
```

It is quite important to add the mavenCentral() repository, because the dokka plugin loads some of its dependencies from it. Calling the task dokkaHtmlMultiModule builds the dokka documentation of all modules and aggregates it in the build/dokkaCustomMultiModuleOutput directory.

This step adds the following tasks to the project. Note especially the *MultiModule tasks, which use the configuration described above.

```text
Documentation tasks
dokkaGfm - Generates documentation in GitHub flavored markdown format
dokkaGfmCollector - Generates documentation merging all subprojects 'dokkaGfm' tasks into one virtual module
dokkaGfmMultiModule - Runs all subprojects 'dokkaGfm' tasks and generates module navigation page
dokkaHtml - Generates documentation in 'html' format
dokkaHtmlCollector - Generates documentation merging all subprojects 'dokkaHtml' tasks into one virtual module
dokkaHtmlMultiModule - Runs all subprojects 'dokkaHtml' tasks and generates module navigation page
dokkaJavadoc - Generates documentation in 'javadoc' format
dokkaJavadocCollector - Generates documentation merging all subprojects 'dokkaJavadoc' tasks into one virtual module
dokkaJekyll - Generates documentation in Jekyll flavored markdown format
dokkaJekyllCollector - Generates documentation merging all subprojects 'dokkaJekyll' tasks into one virtual module
dokkaJekyllMultiModule - Runs all subprojects 'dokkaJekyll' tasks and generates module navigation page
javadoc - Generates Javadoc API documentation for the main source code.
```

add Integration Tests

In this step, we are going to add the integrationTest-Task and the associated SourceSet (named testIntegration) to the project.

As already mentioned, we are going to use conventions. To show some of the nuts and bolts, we also add some additional classes, demonstrating that this task can use classes marked with the internal visibility modifier.

The gradle manual offered quite some help here. For better readability of the project structure (meaning: for a better sorting of folders), the 'integrationTest' source set is renamed to 'testIntegration'. This shows the testIntegration source directory right after the test folder, which makes the structure clearer IMHO.
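Following the integration-test pattern from the gradle manual, the source set and task could be declared roughly as in this sketch of test-producer-conventions.gradle.kts. It is not the author's exact file: applying kotlin("jvm") directly and reusing the unit-test dependencies are assumptions made to keep the example self-contained.

```kotlin
// test-producer-conventions.gradle.kts (sketch)
plugins {
    // In the real setup this usually comes from the common conventions
    kotlin("jvm")
}

// Source set for integration tests, sources in src/testIntegration/kotlin
val testIntegration: SourceSet by sourceSets.creating {
    compileClasspath += sourceSets.main.get().output
    runtimeClasspath += sourceSets.main.get().output
}

// Integration tests reuse the dependencies of the unit tests
configurations[testIntegration.implementationConfigurationName]
    .extendsFrom(configurations.testImplementation.get())
configurations[testIntegration.runtimeOnlyConfigurationName]
    .extendsFrom(configurations.testRuntimeOnly.get())

val testIntegrationTask = tasks.register<Test>("integrationTest") {
    description = "Runs the integration tests."
    group = "verification"
    testClassesDirs = testIntegration.output.classesDirs
    classpath = testIntegration.runtimeClasspath
    shouldRunAfter(tasks.test)
    useJUnitPlatform()
}

// Make `check` run the integration tests as well
tasks.check {
    dependsOn(testIntegrationTask)
}
```

Keeping the task provider in a variable (testIntegrationTask) lets later configuration, such as the shared test-report data, refer to the task lazily instead of realizing it eagerly.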

To keep the project maintainable, the configuration of the Integration Tests is kept in two files, one referenced from the sub-modules, which are the producers of the configuration, and one for the consumer, which is the root-project. Those files are referenced in the corresponding conventions accordingly.

The file test-producer-conventions.gradle.kts contains the configuration of the source set and the task. Access to internal declarations is provided by the following statement:

```kotlin
import org.jetbrains.kotlin.gradle.plugin.KotlinTarget

// Associate the testIntegration compilation with main, so that it can see `internal` declarations
val koTarget: KotlinTarget = kotlin.target
koTarget.compilations.named("testIntegration") {
    associateWith(target.compilations.named("main").get())
}
```

According to the YouTrack issue KT-34102, IntelliJ IDEA currently does not recognize the above configuration. Therefore the InternalDummyClassTest in the testIntegration source set shows an error in IntelliJ, but compiles cleanly with gradle.
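To make the scenario concrete, here is a hypothetical version of the classes involved, shown in one listing for brevity. Only the names InternalDummyClass and InternalDummyClassTest come from the project; their contents and the package are assumptions. In the project, the class lives in src/main/kotlin and the test in src/testIntegration/kotlin.

```kotlin
// Hypothetical content; in the project these are two files in different source sets
package io.kmptemplate

import assertk.assertThat
import assertk.assertions.isEqualTo
import kotlin.test.Test

// Only visible inside its module and in compilations associated with main
internal class InternalDummyClass {
    internal fun greet(name: String): String = "Hello, $name"
}

class InternalDummyClassTest {
    @Test
    fun `integration tests can call internal members`() {
        // Compiles only because testIntegration is associated with main
        assertThat(InternalDummyClass().greet("gradle")).isEqualTo("Hello, gradle")
    }
}
```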

The consumer part of the configuration can be found in the file test-consumer-conventions.gradle.kts. This configuration consumes the test-report data that the producer configuration publishes from all submodules and aggregates the test reports using the task testReport. It is heavily based on gradle Test-Reporting.

Just one line needs to be added to the "binaryTestResultElements" configuration (aka test-report-data) to aggregate the testIntegration reports as well:

```kotlin
outgoing.artifact(testIntegrationTask.map { task -> task.binaryResultsDirectory.get() })
```

When running the check task on the project, all integration tests are run and a report is generated in the build/reports/allTests folder, which contains the results of all tests in the project.
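The consumer side can be sketched along the lines of the gradle Test-Reporting sample. The project paths :list and :utilities are assumptions based on the gradle init template, and the attribute values have to match whatever the producer convention actually publishes.

```kotlin
// test-consumer-conventions.gradle.kts (sketch), applied to the root project
plugins {
    // Provides the `reporting` extension used below
    id("reporting-base")
}

// Resolvable configuration that collects the binary test results of the sub-modules
val testReportData by configurations.creating {
    isCanBeConsumed = false
    isCanBeResolved = true
    attributes {
        attribute(Category.CATEGORY_ATTRIBUTE, objects.named(Category.DOCUMENTATION))
        attribute(DocsType.DOCS_TYPE_ATTRIBUTE, objects.named("test-report-data"))
    }
}

dependencies {
    // Hypothetical project paths, one entry per producing sub-module
    testReportData(project(":list"))
    testReportData(project(":utilities"))
}

tasks.register<TestReport>("testReport") {
    // Results in build/reports/allTests
    destinationDirectory.set(reporting.baseDirectory.dir("allTests"))
    testResults.from(testReportData)
}
```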

add Jacoco

To get one of the most widely used metrics in software development (coverage), we need to add jacoco to the project.

Like the dokka documentation, the jacoco reports are generated per sub-module and then aggregated in the root of the project. We need to add both the report generation and the report aggregation to our small project. This is done using the conventions jacoco-producer and jacoco-consumer.

The aggregation of the report uses the same approach as the test reports. It produces xml, csv and html reports, so that they can be used in the documentation as well as in the Sonarqube reporting.
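A possible shape of the jacoco-consumer side is sketched below; it is not the author's convention. The attribute value jacoco-coverage-data and the project paths are assumptions that must match the producer convention, and for brevity the source and class directories are read directly from the sub-modules instead of being shared through further configurations. The task name aggregateJacocoTestReport matches the report path used in the sonarqube configuration, because jacoco writes its XML report to reports/jacoco/<taskName>/<taskName>.xml by default.

```kotlin
// jacoco-consumer convention (sketch), applied to the root project
plugins {
    base
    jacoco
}

// Collects the execution data (*.exec) published by the sub-modules
val coverageData by configurations.creating {
    isCanBeConsumed = false
    isCanBeResolved = true
    attributes {
        attribute(Category.CATEGORY_ATTRIBUTE, objects.named(Category.DOCUMENTATION))
        attribute(DocsType.DOCS_TYPE_ATTRIBUTE, objects.named("jacoco-coverage-data"))
    }
}

dependencies {
    // Hypothetical project paths
    coverageData(project(":list"))
    coverageData(project(":utilities"))
}

val aggregateJacocoTestReport = tasks.register<JacocoReport>("aggregateJacocoTestReport") {
    group = "verification"
    description = "Aggregates the jacoco coverage of all sub-modules."
    executionData.from(coverageData)
    // Simplification: take sources and classes of every JVM sub-module directly
    subprojects.forEach { sub ->
        sub.pluginManager.withPlugin("java") {
            val main = sub.extensions.getByType<SourceSetContainer>().getByName("main")
            sourceDirectories.from(main.allSource.srcDirs)
            classDirectories.from(main.output.classesDirs)
        }
    }
    reports {
        xml.required.set(true)
        csv.required.set(true)
        html.required.set(true)
    }
}

tasks.check {
    dependsOn(aggregateJacocoTestReport)
}
```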

add detekt

detekt is a kotlin-specific static code analysis tool, and its results can also be integrated into the sonarqube reports.

The following configuration is added to each sub-module and generates the detekt report for those.

```kotlin
detekt {
    buildUponDefaultConfig = false
    ignoreFailures = true
    reports {
        html.enabled = true
        xml.enabled = true
        txt.enabled = false
        sarif.enabled = false
    }
}
```

Since the generated results should be aggregated as well, we need to add some configuration to the root project. This is done in the aggregation convention.

```kotlin
import io.gitlab.arturbosch.detekt.Detekt

val aggregateDetektTask = tasks.register<Detekt>("aggregateDetekt") {
    buildUponDefaultConfig = false
    ignoreFailures = true
    reports {
        html.enabled = true
        xml.enabled = true
        txt.enabled = false
        sarif.enabled = false
    }
    // Analyze the sources of every Detekt task in all sub-modules
    source(
        subprojects.flatMap { subproject ->
            subproject.tasks.filterIsInstance<Detekt>().map { task ->
                task.source
            }
        }
    )
}
```

Please note that this aggregation runs a full analysis over the sources of all sub-modules. At the time of writing, it is not possible to build the aggregation from the results of each sub-module (see detekt github discussion).

add sonarqube

Sonarqube is a static code quality tool and offers a free instance for open-source projects on sonarcloud.io. Using it requires some configuration, which builds on some of the previously described configurations (e.g. jacoco and detekt).

```kotlin
// githubOrg and githubProjectUrl are project-specific values defined elsewhere in the build script (not shown)
sonar {
    properties {
        // See https://docs.sonarqube.org/display/SCAN/Analyzing+with+SonarQube+Scanner+for+Gradle#AnalyzingwithSonarQubeScannerforGradle-Configureanalysisproperties
        property("sonar.sourceEncoding", "UTF-8")
        property("sonar.projectName", rootProject.name)
        property("sonar.projectKey", System.getenv()["SONAR_PROJECT_KEY"] ?: rootProject.name)
        property("sonar.organization", System.getenv()["SONAR_ORGANIZATION"] ?: githubOrg)
        property("sonar.projectVersion", rootProject.version.toString())
        property("sonar.host.url", System.getenv()["SONAR_HOST_URL"] ?: "https://sonarcloud.io")
        property("sonar.login", System.getenv()["SONAR_TOKEN"] ?: "")
        property("sonar.scm.provider", "git")
        property("sonar.links.homepage", githubProjectUrl)
        property("sonar.links.ci", "$githubProjectUrl/actions")
        property("sonar.links.scm", githubProjectUrl)
        property("sonar.links.issue", "$githubProjectUrl/issues")
        property(
            "sonar.coverage.jacoco.xmlReportPaths",
            layout.buildDirectory.file("reports/jacoco/aggregateJacocoTestReport/aggregateJacocoTestReport.xml")
                .get().asFile.absolutePath
        )
    }
}
```

To fetch additional sub-module-specific data (detekt) for sonarqube, additional configuration is required in each sub-module.
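One way to provide this per-module data is sketched below, configured from the root build script where the org.sonarqube plugin is applied (the sonar extension is then available in the sub-projects as well). It assumes that each module writes its detekt XML report to the default location build/reports/detekt/detekt.xml, and uses sonar.kotlin.detekt.reportPaths, the import property of the sonar Kotlin analyzer.

```kotlin
// Root build script (sketch), where the org.sonarqube plugin is applied
subprojects {
    sonar {
        properties {
            // Assumes the default output location of each module's detekt XML report
            property(
                "sonar.kotlin.detekt.reportPaths",
                layout.buildDirectory.file("reports/detekt/detekt.xml").get().asFile.absolutePath
            )
        }
    }
}
```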

add documentation with asciidoc

Each project requires some documentation. This project uses asciidoc as the documentation source language. All documentation lives in the new documentation sub-module, which is included in settings.gradle.kts:

```kotlin
includeProject(file("documentation"))
```

To configure asciidoc, a new producer convention is added (asciidoc-producer-conventions.gradle.kts). To reference the current revision date and number, this file reads them from environment variables, falling back to defaults.
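How these values reach the generated documents is not spelled out here; one possible sketch passes them to the asciidoctor task as the standard AsciiDoc attributes revdate and revnumber. It assumes that the Asciidoctor gradle plugin (plugin id org.asciidoctor.jvm.convert, task type org.asciidoctor.gradle.jvm.AsciidoctorTask) is on the build-logic classpath, and that revDate and revNumber are declared in the same convention file before this block.

```kotlin
// asciidoc-producer-conventions.gradle.kts (sketch); imports belong at the top of the file
import org.asciidoctor.gradle.jvm.AsciidoctorTask

plugins {
    id("org.asciidoctor.jvm.convert")
}

// ... revDate and revNumber are declared here (see the definitions in this section) ...

tasks.named<AsciidoctorTask>("asciidoctor") {
    // revdate and revnumber are standard AsciiDoc document header attributes
    attributes(mapOf("revdate" to revDate, "revnumber" to revNumber))
}
```

Declaring the two values before the task configuration matters: if the task is already realized, the configuration block runs immediately and would otherwise see uninitialized values.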

```kotlin
import java.time.LocalDateTime
import java.time.format.DateTimeFormatter

val revDate = System.getenv()["revdate"] ?: LocalDateTime.now().format(DateTimeFormatter.ofPattern("yyyy-MM-dd"))
val revNumber = System.getenv()["revnumber"] ?: "DEV-Version"
```

publish packages

The produced JAR files are published during a release process (documented in a separate article) using github-actions. Given the nature of this project this is rather unnecessary, but it is done anyway to demonstrate the process.

The packages are published to the github repository using the maven-publish plugin, which is configured in maven-publish-conventions.gradle.kts.

The published packages include the produced JAR files as well as the sources JAR files.
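A minimal sketch of such a publishing convention, following the pattern GitHub documents for its Maven package registry, could look like this. OWNER/REPO is a placeholder for the actual repository, and the credentials are assumed to come from the GITHUB_ACTOR and GITHUB_TOKEN environment variables available in github-actions.

```kotlin
// maven-publish-conventions.gradle.kts (sketch)
plugins {
    java
    `maven-publish`
}

java {
    // Also build a *-sources.jar next to the regular JAR
    withSourcesJar()
}

publishing {
    publications {
        create<MavenPublication>("maven") {
            // Publishes the JAR and, via withSourcesJar(), the sources JAR
            from(components["java"])
        }
    }
    repositories {
        maven {
            name = "GitHubPackages"
            // OWNER/REPO is a placeholder, not this project's repository
            url = uri("https://maven.pkg.github.com/OWNER/REPO")
            credentials {
                username = System.getenv("GITHUB_ACTOR")
                password = System.getenv("GITHUB_TOKEN")
            }
        }
    }
}
```

With the repository named GitHubPackages, gradle generates a task called publishAllPublicationsToGitHubPackagesRepository that a release workflow can invoke.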

package docker container

The docker image is stored in DockerHub and is built using github-actions.

Conclusion

This small post shows that a kotlin project using gradle can be adapted quite easily to the extended requirements usually found in growing software projects. The kotlin DSL can improve type safety, but on the other hand it makes some of the documentation found online quite hard to adapt to a new project. With conventions and the best practices from the gradle community, however, the build stays out of the developers' way while still fulfilling all needs.

The build-logic conventions do offer a great deal of flexibility but still provide some best-practices to a software project. My recommendation is to use this toolset.

If you have larger projects, it could make sense to provide this functionality via custom plugins, but for small to mid-size projects this approach seems to be the best fit.

There are no plans to provide a full-blown plugin for this kind of configuration. If you would like to try out a quite opinionated plugin that provides nearly all of the above configuration, please try kordamp.org.